Policies, Frameworks & Blueprints

At Voice For Change Foundation, our initiatives go beyond advocacy — they provide actionable blueprints for how AI can be developed and governed responsibly, ensuring that technology strengthens workers, communities, and the future of our society.

-

AI-informed pathways to support the Federal Reserve’s 2% inflation goal by balancing monetary tightening with real-time labor-market signals on employment stability and growth.

This analysis synthesizes publicly available labor-market data, longitudinal job-market signals, and anonymized qualitative observations using AI-assisted pattern recognition, with all interpretations subject to human review and intended to complement—not replace—official economic statistics.

-

Purpose

The Economic & AI North Star defines why the United States is adopting AI—and what success looks like beyond GDP. It anchors all downstream policies to a single principle:

AI must expand economic opportunity, preserve human dignity, and strengthen national resilience—not merely increase corporate efficiency.

Core Objectives

Maintain U.S. leadership in AI innovation

Protect and evolve the American workforce (white- and blue-collar)

Restore trust in institutions, hiring systems, and markets

Use AI productivity gains to stabilize long-term public finances

Non-Negotiables

Humans remain accountable for consequential decisions

Economic gains from AI must be broadly shared

No innovation without safeguards in high-impact domains

This North Star governs every framework below.

-

What This Framework Does

This framework defines how businesses, governments, and institutions adopt AI responsibly across the U.S. economy, even before binding regulation takes effect.

Core Pillars

1. Human-in-the-Loop by Design

AI may assist, recommend, or optimize—but humans retain decision authority in:

Hiring and termination

Credit, housing, insurance

Healthcare decisions

Public benefits and law enforcement

2. Explainability as a Trust Requirement

If an AI system affects a person’s livelihood, health, or rights:

The outcome must be explainable in plain language

Reason codes must be accessible

Appeals must route to a human

3. Proportional Safeguards

Low-risk AI: disclosure + privacy hygiene

Medium-risk AI: transparency reports + periodic review

High-risk AI: audits, monitoring, human oversight

4. Incentivized Responsibility

Compliance is rewarded through:

Procurement preference

Tax credits and grants

Public certification labels

This framework prepares the ground for regulation—so adoption doesn’t stall or backfire.

-

The National Rulebook

Purpose

FAIGA is the binding federal backbone that prevents regulatory chaos while protecting innovation.

Institutional Design

Federal AI Regulatory Agency (FARA)

Independent or meaningfully insulated (FTC / Commerce-aligned)

Coordinates with EEOC, CFPB, DOJ, HHS, DOT, DHS, DoD

Harmonizes with NIST technical standards

Risk-Based Regulatory Model

| Risk Tier | Examples | Obligations |
|---|---|---|
| High | Hiring, healthcare, credit, law enforcement, autonomous systems | Pre-deployment impact assessments, audits, explainability, human oversight |
| Medium | HR screening, logistics, large-scale advertising | Transparency reports, bias testing |
| Low | Chatbots, recommenders | Disclosure + privacy safeguards |
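To illustrate how a deployer might apply the risk-based model mechanically, here is a minimal Python sketch that maps a use case to its tier and obligations. The tier names, examples, and obligations come from the model above. The lookup table, the `obligations_for` helper, and the rule that unlisted use cases default to the High tier are hypothetical illustrations, not part of FAIGA.

```python
from enum import Enum


class RiskTier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


# Obligations per tier, transcribed from the risk-based model.
OBLIGATIONS = {
    RiskTier.HIGH: (
        "pre-deployment impact assessment",
        "audit",
        "explainability",
        "human oversight",
    ),
    RiskTier.MEDIUM: ("transparency report", "bias testing"),
    RiskTier.LOW: ("disclosure", "privacy safeguards"),
}

# Hypothetical lookup table built from the examples listed for each tier.
USE_CASE_TIERS = {
    "hiring": RiskTier.HIGH,
    "healthcare": RiskTier.HIGH,
    "credit": RiskTier.HIGH,
    "law enforcement": RiskTier.HIGH,
    "autonomous systems": RiskTier.HIGH,
    "hr screening": RiskTier.MEDIUM,
    "logistics": RiskTier.MEDIUM,
    "large-scale advertising": RiskTier.MEDIUM,
    "chatbot": RiskTier.LOW,
    "recommender": RiskTier.LOW,
}


def obligations_for(use_case: str) -> tuple[str, ...]:
    """Return the obligations for a use case.

    Assumption: anything not in the table is treated as High risk,
    a conservative default chosen for this sketch only.
    """
    tier = USE_CASE_TIERS.get(use_case.strip().lower(), RiskTier.HIGH)
    return OBLIGATIONS[tier]
```

For example, `obligations_for("Chatbot")` returns `("disclosure", "privacy safeguards")`. Defaulting unknown cases upward keeps a new, unclassified deployment from slipping into the lightest tier by omission.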

-

(National + State-Adaptable Layer)

Purpose

This framework closes the biggest gap in U.S. AI policy: deployment accountability, not research control.

Core Principle

If AI is allowed to act in the real world, humans must remain responsible.

Deployment Requirements (High-Stakes Systems)

Human authorization before consequential actions

Audit logs and traceability

Fail-safe defaults

Incident response obligations

Right to explanation and review
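Several of these requirements can be combined in software as a single checkpoint in front of an AI agent. The Python sketch below is one hypothetical way to do it: consequential actions run only after a human approves them; any failure in the approval step results in "do not act" (a fail-safe default); and every decision is recorded in an audit log together with a plain-language reason that can support later explanation and review. `DeploymentGate`, `AuditEntry`, and the `approve` callback are illustrative names, not drawn from TRAIGA or FAIGA, and incident response is outside the scope of this sketch.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class AuditEntry:
    timestamp: str
    action: str
    reason: str                 # plain-language reason, kept for explanation and review
    approved_by: Optional[str]  # human approver ID, or None
    executed: bool


@dataclass
class DeploymentGate:
    """Hypothetical gate between an AI agent's proposed action and the real world."""
    audit_log: list[AuditEntry] = field(default_factory=list)

    def run(self, action: str, reason: str, consequential: bool,
            approve: Callable[[str, str], Optional[str]]) -> bool:
        approver: Optional[str] = None
        if consequential:
            try:
                # approve() asks a human; it returns their ID, or None to refuse.
                approver = approve(action, reason)
            except Exception:
                approver = None  # fail-safe default: an error means "do not act"
            execute = approver is not None
        else:
            execute = True  # non-consequential actions proceed but are still logged
        self.audit_log.append(AuditEntry(
            timestamp=datetime.now(timezone.utc).isoformat(),
            action=action,
            reason=reason,
            approved_by=approver,
            executed=execute,
        ))
        return execute
```

The design choice here is that the gate, not the agent, decides whether to act, and it records refusals and failures as well as approvals, so the log reflects every decision point.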

Texas as a Model (TRAIGA Phase II)

Texas demonstrates how states can:

Regulate deployment without chilling innovation

Exclude research, training, and open-source development

Focus on agentic systems that trigger real-world outcomes

FAIGA sets the federal floor.

TRAIGA-style laws become state-level accelerators.

-

A concise Texas-first legislative proposal for responsible AI deployment.

-

Stabilizing the Labor Market

Why This Is Critical

AI-driven hiring is now a systemic labor-market risk, not a tooling choice.

Core Guarantees

For Workers

Right to know when AI is used

Right to a reasoned explanation

Right to human review

For Employers

Skills-based hiring standards

Minimum human-review floors

Bias audits and retention of logs

For the Economy

Fewer false negatives (qualified candidates wrongly screened out)

Faster re-entry for displaced professionals

Restored trust in hiring systems

National Policy Hooks

EEOC-aligned enforcement

NIST HR-AI standards

Procurement preference for compliant employers

This blueprint directly stabilizes white-collar re-entry and reduces long-term unemployment.

-

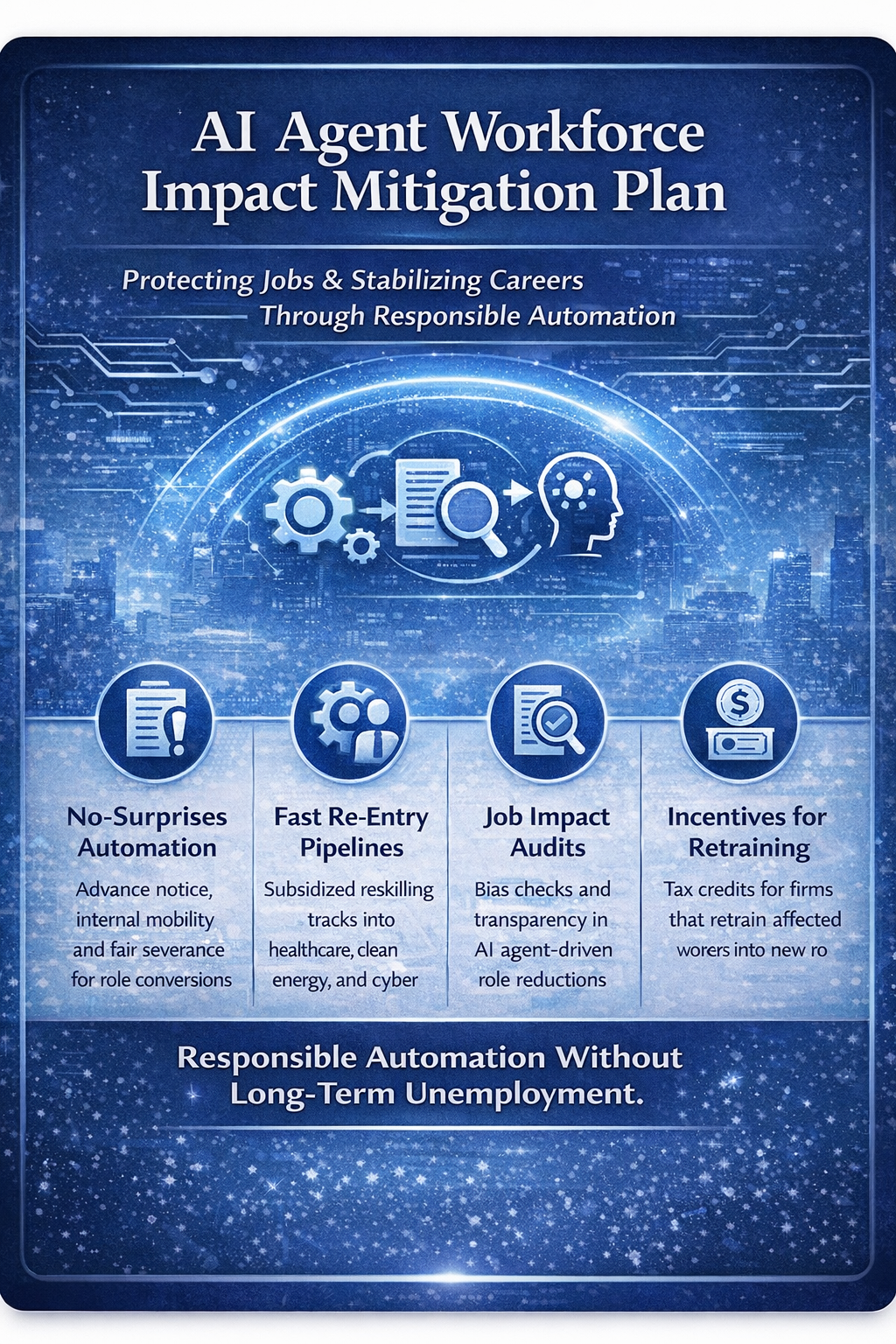

Corporate Obligation Layer

Purpose

This plan governs what companies must do when deploying AI agents that replace or radically restructure roles.

Key Requirements

No-Surprises Automation

Advance notice of role conversions

Internal mobility first

Redeploy-or-separate packages

Fast Re-Entry Pipelines

Healthcare

Clean energy

Cybersecurity

Advanced manufacturing

Incentive Alignment

Tax credits for retraining

Higher payroll taxes for mass displacement without mitigation

This is how automation becomes responsible instead of extractive.

-

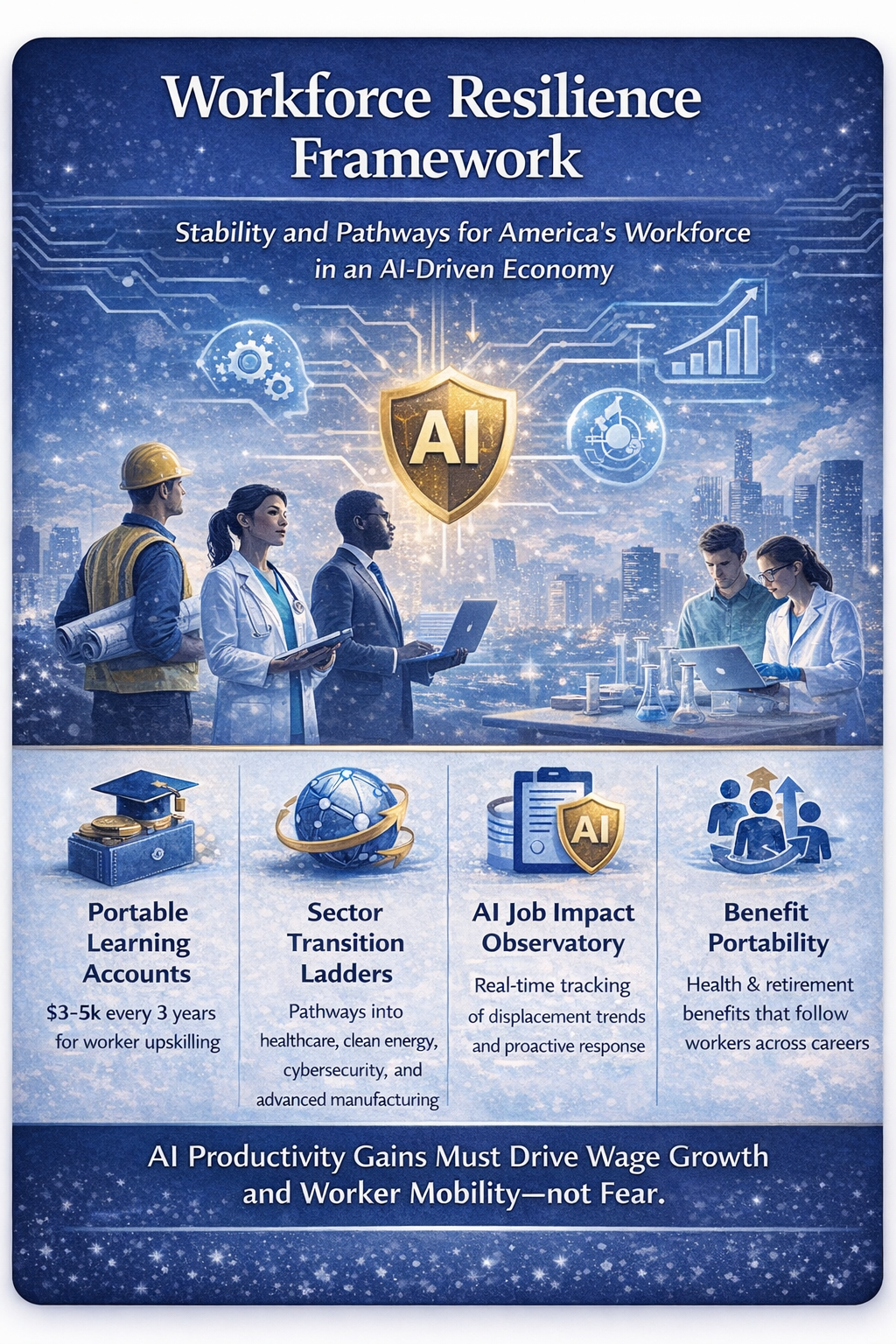

Government & Worker Support Layer

Purpose

This framework ensures workers thrive regardless of corporate behavior.

Structural Commitments

Portable learning accounts ($3–5k every 3 years)

National AI Job Impact Observatory

Sectoral transition ladders

Benefit portability

Result

AI productivity gains translate into:

Wage growth

Mobility

Reduced fear of innovation

-

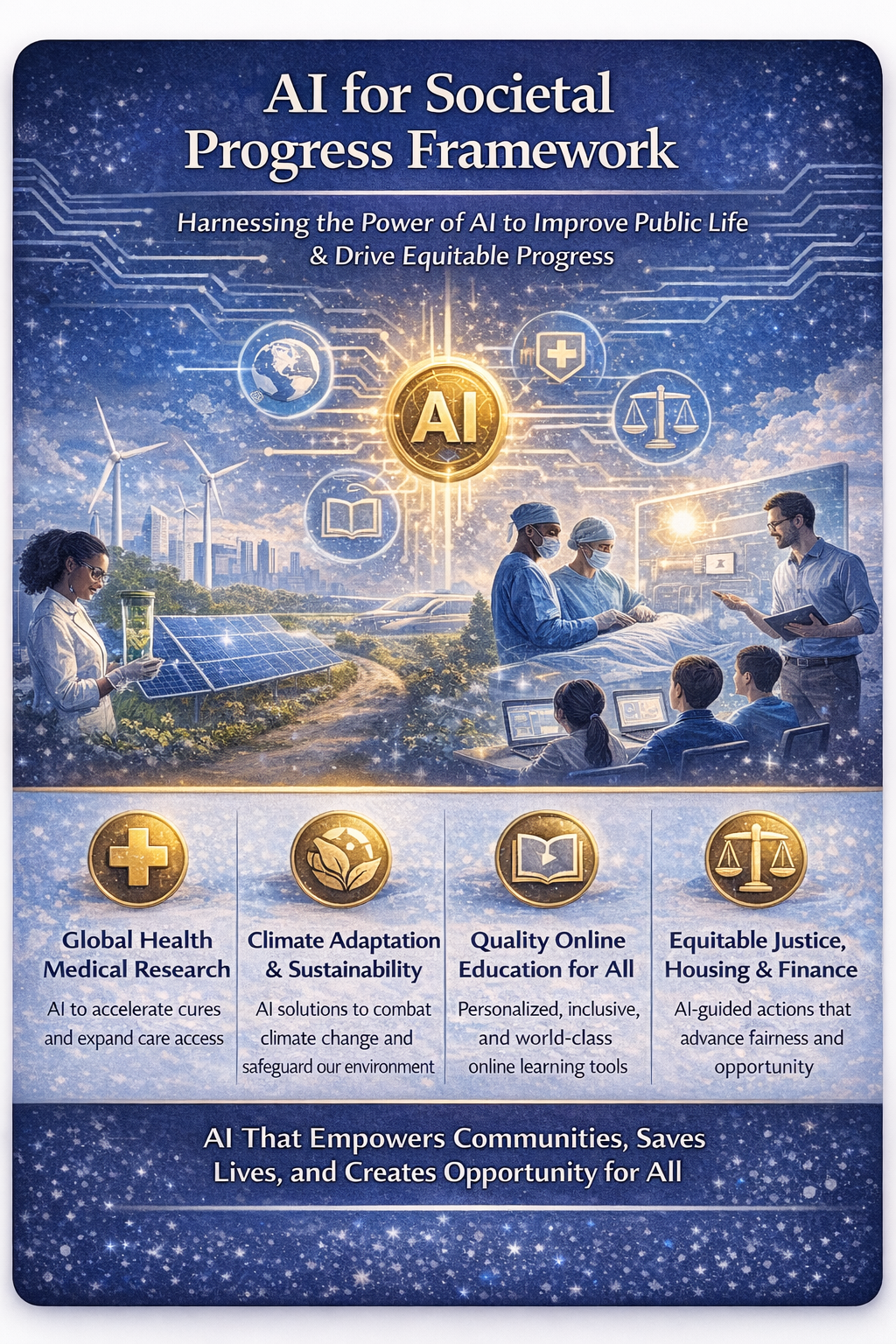

Public-Good Deployment

Focus Areas

Healthcare access

Climate resilience

Public-sector efficiency

Equity and inclusion

AI deployed here is explicitly bound to public-mission outcomes, not profit alone.

-

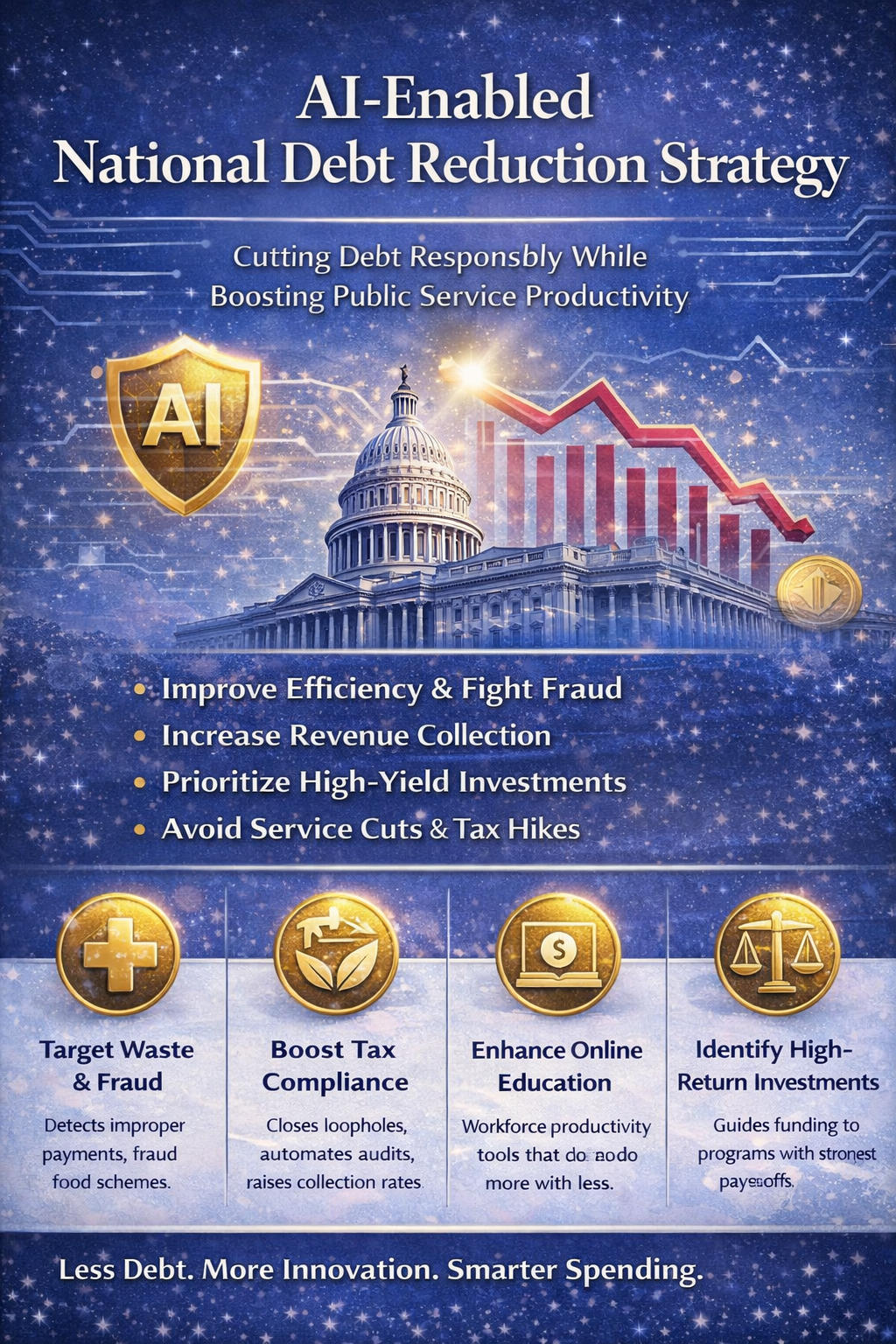

Fiscal Anchor

How This Connects Everything

AI helps:

Eliminate waste and fraud

Improve tax compliance

Optimize procurement

Reduce healthcare admin costs

Power climate-linked revenue

Critical Safeguard

Debt reduction cannot come from austerity or workforce erosion—it must come from productivity recapture.

-

(Social Equity & Automation-Era Resilience Side)

A data-driven, inflation-aware blueprint to turn AI-era productivity gains into a permanent living-wage floor of $1,500 per adult and $500 per child (in 2025 dollars), indexed to the cost of living and in place by 2032. This plan ensures every American shares in the wealth created by automation through a funded, fiscally responsible “AI dividend” that protects dignity, demand, and democracy.
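To make "indexed to the cost of living" concrete, the Python sketch below scales a household's 2025-dollar floor by the ratio of a current price index to its 2025 value. The $1,500 and $500 base amounts come from the blueprint. The choice of index (for example, a CPI series), the index values used in the usage example, and the `indexed_floor` helper are hypothetical. The blueprint text does not state the payment period, so the result is in whatever period the base amounts are defined for.

```python
# Base amounts stated in the blueprint, in 2025 dollars.
BASE_ADULT_2025 = 1_500.0
BASE_CHILD_2025 = 500.0


def indexed_floor(adults: int, children: int,
                  price_index_now: float, price_index_2025: float) -> float:
    """Household floor in current dollars, scaled by a cost-of-living index ratio.

    Assumption: indexing is a simple ratio of index levels; the actual
    index and any smoothing rules are not specified in the blueprint.
    """
    if adults < 0 or children < 0:
        raise ValueError("household counts must be non-negative")
    if price_index_now <= 0 or price_index_2025 <= 0:
        raise ValueError("price index values must be positive")
    base_2025 = adults * BASE_ADULT_2025 + children * BASE_CHILD_2025
    return round(base_2025 * price_index_now / price_index_2025, 2)
```

For a household of two adults and two children, the 2025-dollar floor is 2 × $1,500 + 2 × $500 = $4,000. If the chosen index rose 10% (say from 100 to 110), `indexed_floor(2, 2, 110.0, 100.0)` returns `4400.0`.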

-

A concise, non-binding informational submission supporting human-centric AI Act implementation in Europe through workforce-impact considerations informed by observed U.S. labor-market trends.

-

(climate & sustainability side)

AI-driven strategies to cut emissions, predict extreme weather, optimize renewable energy, and implement climate-smart revenue policies like carbon pricing.

-

(international governance side)

A collaborative charter proposed to the French Élysée and international partners to establish shared ethical principles for AI, emphasizing equity, sustainability, and human rights.

-

(energy & innovation side)

An AI-enhanced blueprint to accelerate nuclear fusion breakthroughs through advanced plasma modeling, materials science, and adaptive safety systems—paving the way for limitless clean energy.